Sentiment Analysis on Movie Reviews: A predictive model with pre-trained BERT in PyTorch

In this project, I explored sentiment analysis on movie reviews with a predictive model built on pre-trained BERT and implemented in PyTorch. Because BERT represents each word in the context of the whole sentence, it is well suited to analyzing and predicting the sentiment of movie reviews.

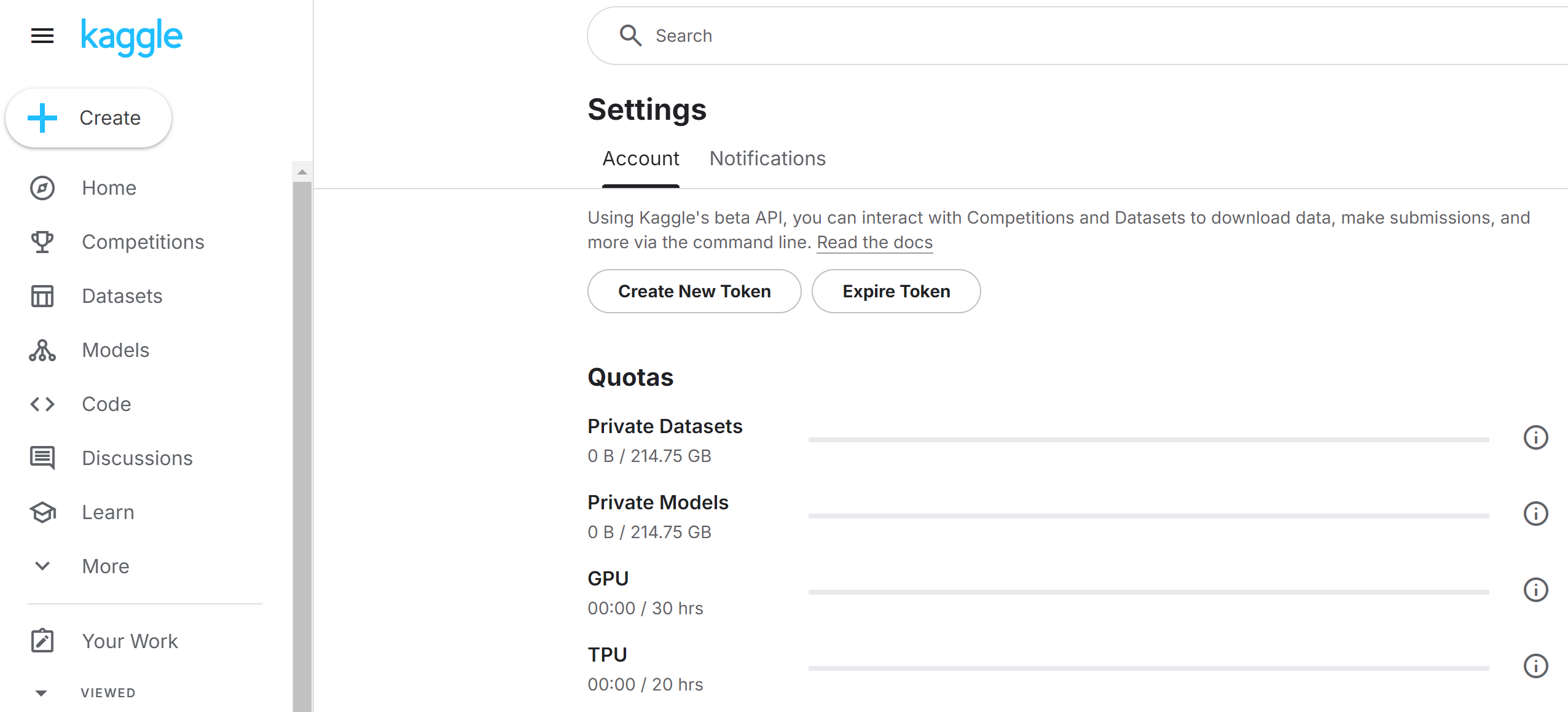

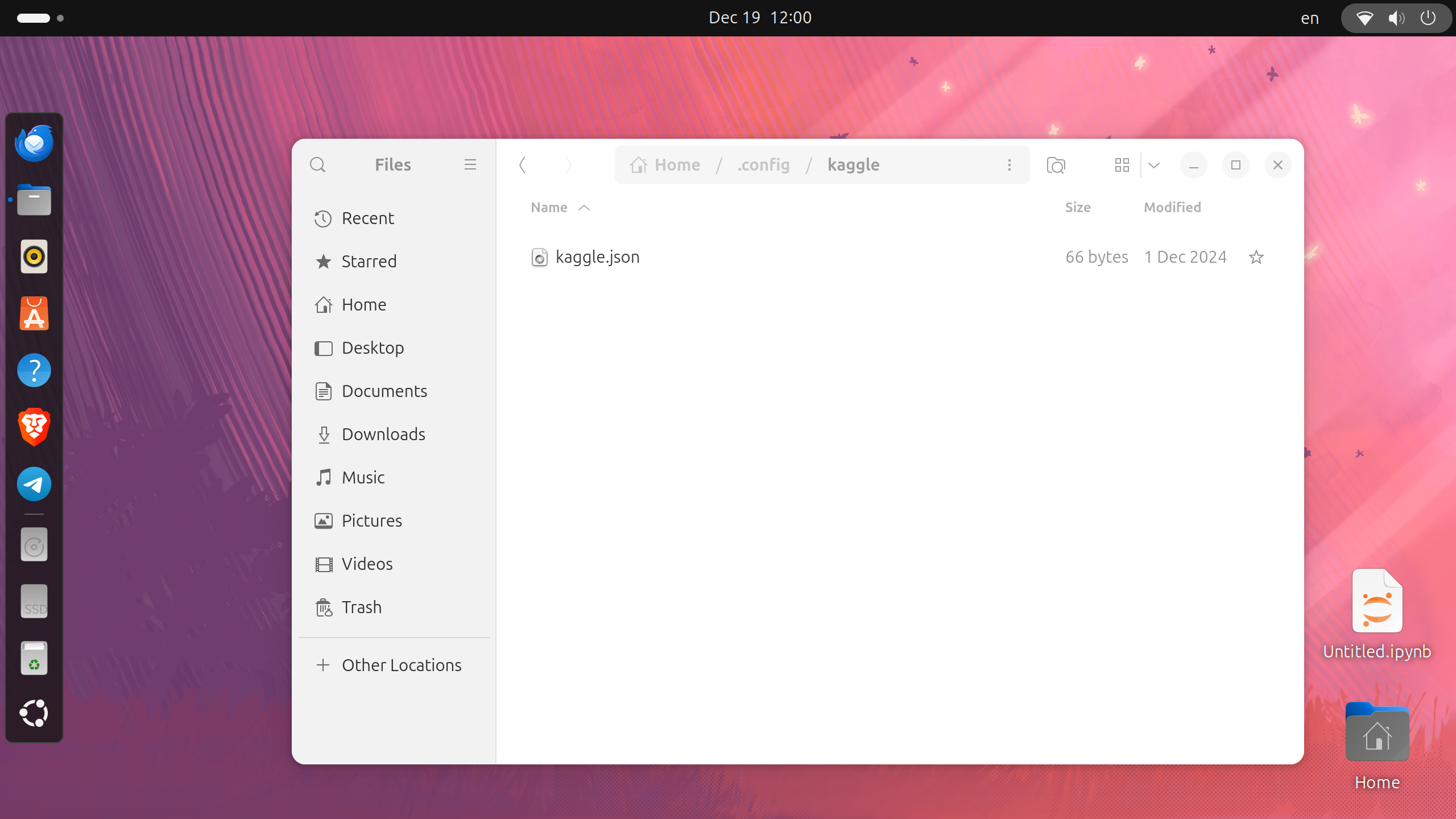

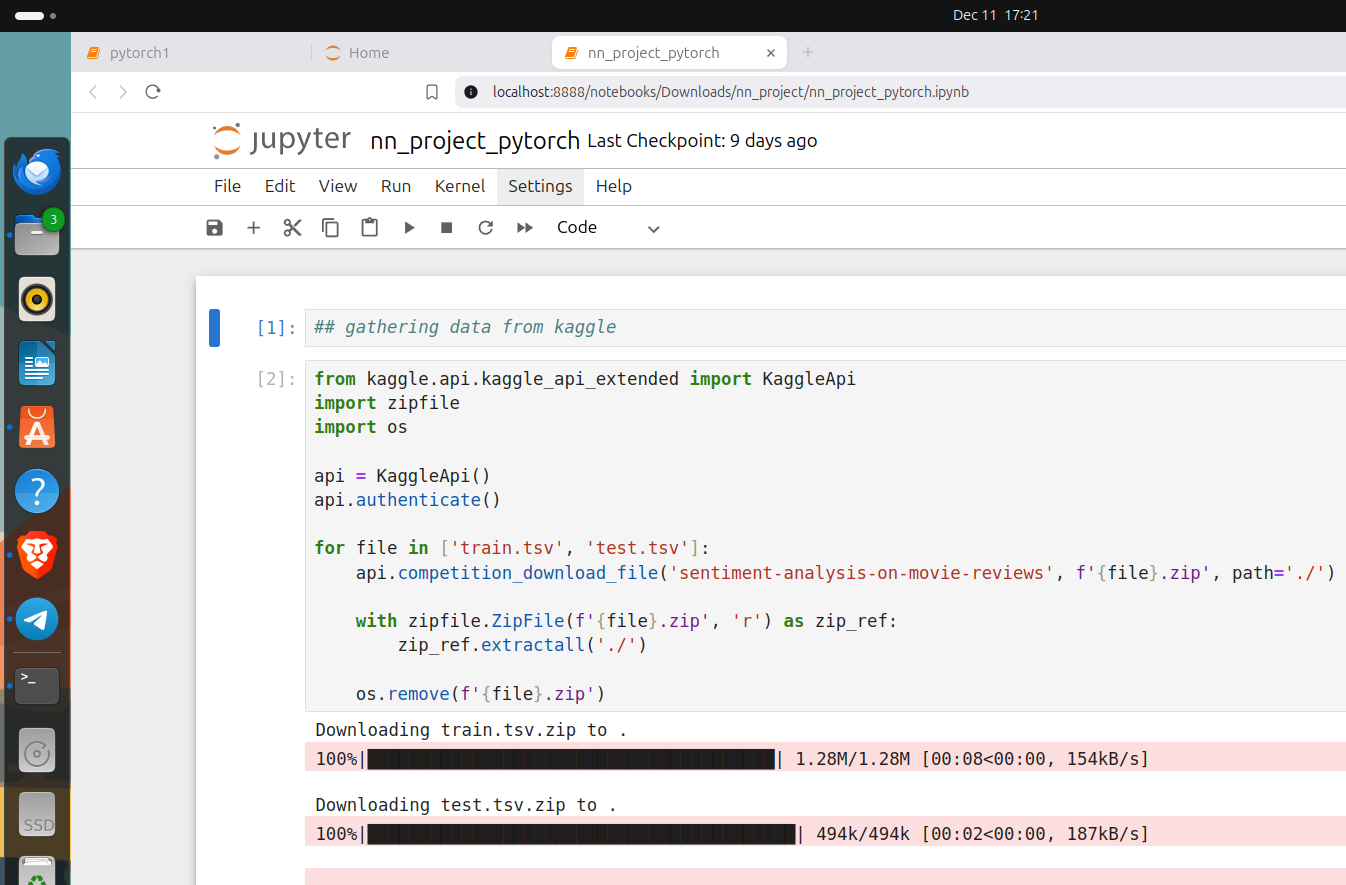

Kaggle API

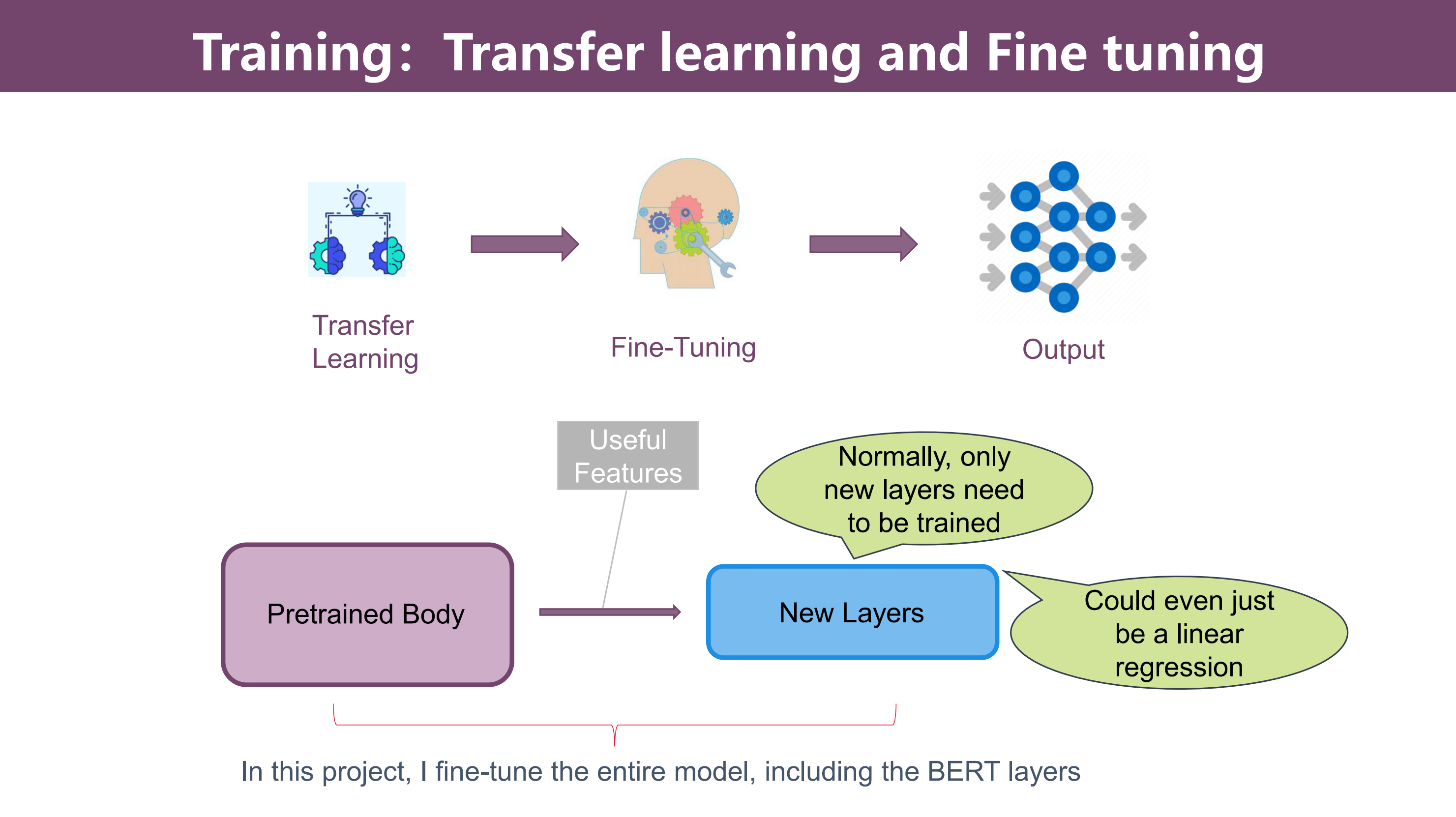

Transfer learning and fine-tuning

What are transfer learning and fine-tuning?

Transfer learning is a general machine learning technique; it is not specific to transformers or their architecture. Applied to transformers, it lets us start from a model that has already been pre-trained on a large corpus, which greatly reduces the cost and effort of training. Without transfer learning, every new task would require training a transformer from scratch, which would be prohibitively expensive.

Fine-tuning is a transfer learning technique in which I take a pre-trained model (such as BERT, which has been trained on a large amount of general text) and train it further on a specific task or dataset, while leveraging the knowledge it has already learned.

When I first learned about transfer learning and fine-tuning, a few questions came to mind:

- Do only the new layers need to be trained during transfer learning?
- How can I fine-tune the pre-trained model without training it?
- If I fine-tune the entire model, is that still different from training the pre-trained model from scratch?

When I fine-tune the entire model, I am still leveraging the pre-trained weights and representations from the original BERT model. The key difference is that I am allowing those pre-trained layers to be updated during the fine-tuning process, rather than keeping them completely frozen.

Training BERT from scratch, by contrast, would mean randomly initializing all model parameters and training the entire model on a large, general-purpose dataset (like the one originally used to pre-train BERT) to learn the base language representations.

💡 The key distinction is between initializing the model parameters from scratch and further optimizing parameters that have already been trained. I don't need to pre-train the model myself, since it has already been pre-trained.
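To make this distinction concrete, here is a minimal PyTorch sketch. It does not use real BERT: the tiny `make_encoder` network and the `Classifier` wrapper are hypothetical stand-ins, and `pretrained_encoder` merely plays the role of already-trained weights. Fine-tuning copies those weights in and then lets the optimizer update every parameter; training from scratch keeps the random initialization. With Hugging Face `transformers`, the analogous choice would be between `BertForSequenceClassification.from_pretrained(...)` and constructing the model directly from a `BertConfig`.

```python
import torch
import torch.nn.functional as F
from torch import nn

torch.manual_seed(0)
HIDDEN, NUM_LABELS = 16, 5  # toy sizes (hypothetical); BERT-base uses a hidden size of 768


def make_encoder() -> nn.Sequential:
    """Hypothetical tiny stand-in for BERT's encoder stack."""
    return nn.Sequential(nn.Linear(HIDDEN, HIDDEN), nn.ReLU(), nn.Linear(HIDDEN, HIDDEN), nn.ReLU())


class Classifier(nn.Module):
    """Encoder plus a new, task-specific classification head."""

    def __init__(self) -> None:
        super().__init__()
        self.encoder = make_encoder()
        self.head = nn.Linear(HIDDEN, NUM_LABELS)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.head(self.encoder(x))


def same_weights(a: nn.Module, b: nn.Module) -> bool:
    """True if two identically shaped modules hold exactly the same parameter values."""
    return all(torch.equal(p, q) for p, q in zip(a.parameters(), b.parameters()))


# Pretend these weights came from pre-training on a large general corpus.
pretrained_encoder = make_encoder()

# Fine-tuning: initialize the encoder from the pre-trained weights (values are copied, not shared).
finetune_model = Classifier()
finetune_model.encoder.load_state_dict(pretrained_encoder.state_dict())

# Training from scratch: every parameter keeps its random initialization.
scratch_model = Classifier()

starts_from_pretrained = same_weights(finetune_model.encoder, pretrained_encoder)
scratch_starts_random = not same_weights(scratch_model.encoder, pretrained_encoder)

# Full fine-tuning: all parameters (encoder and head) go to the optimizer and get updated.
optimizer = torch.optim.AdamW(finetune_model.parameters(), lr=1e-3)
x = torch.randn(8, HIDDEN)              # dummy batch standing in for encoded reviews
y = torch.randint(0, NUM_LABELS, (8,))  # dummy labels 0..4
loss = F.cross_entropy(finetune_model(x), y)
optimizer.zero_grad()
loss.backward()
optimizer.step()

still_equal_after_step = same_weights(finetune_model.encoder, pretrained_encoder)
print(starts_from_pretrained, scratch_starts_random, still_equal_after_step)
```

Because `load_state_dict` copies values into the new model, updating `finetune_model` leaves `pretrained_encoder` untouched; only the starting point differs between the two models.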

Conclusion

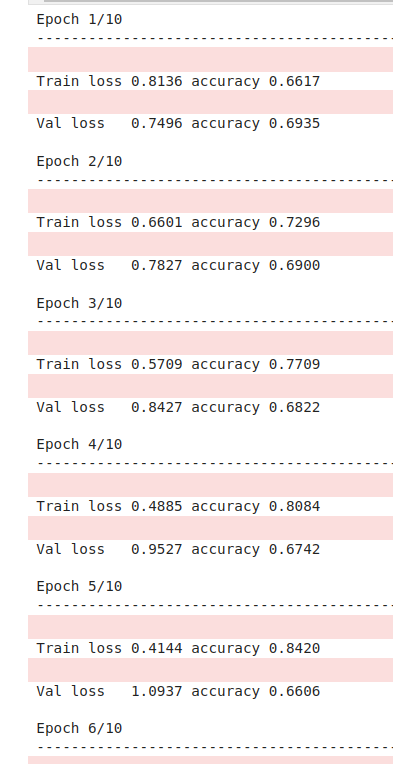

In conclusion, the demo works reasonably well, but its accuracy is only about 70%, so there is room to improve the model.

1. Train for more epochs and observe the result. ✅

Result: overfitting 😂

Reason: I fine-tuned the entire model, including the pre-trained BERT layers.

How to improve: fine-tune only a few layers of the pre-trained model first, then compare the results.
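A minimal sketch of that plan, assuming PyTorch and a hypothetical four-layer `TinyClassifier` standing in for BERT: freeze every parameter, unfreeze only the top `k` encoder layers and the classification head, and give the optimizer only the trainable parameters. In a Hugging Face `BertForSequenceClassification`, the corresponding modules would be `model.bert.encoder.layer` (the list of encoder layers) and `model.classifier`. The helper name `freeze_all_but_top` and the learning rate are illustrative choices, not the project's settings.

```python
import torch
import torch.nn.functional as F
from torch import nn

torch.manual_seed(0)


class TinyClassifier(nn.Module):
    """Hypothetical stand-in for BERT: a stack of encoder layers plus a new head."""

    def __init__(self, hidden: int = 16, num_layers: int = 4, num_labels: int = 5) -> None:
        super().__init__()
        self.layers = nn.ModuleList([nn.Linear(hidden, hidden) for _ in range(num_layers)])
        self.head = nn.Linear(hidden, num_labels)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        for layer in self.layers:
            x = torch.relu(layer(x))
        return self.head(x)


def freeze_all_but_top(model: TinyClassifier, k: int) -> None:
    """Freeze everything, then unfreeze the top k encoder layers and the head."""
    for p in model.parameters():
        p.requires_grad = False
    for layer in model.layers[len(model.layers) - k:]:
        for p in layer.parameters():
            p.requires_grad = True
    for p in model.head.parameters():
        p.requires_grad = True


model = TinyClassifier()
freeze_all_but_top(model, k=1)
trainable = [name for name, p in model.named_parameters() if p.requires_grad]

# Only the trainable parameters are handed to the optimizer.
optimizer = torch.optim.AdamW([p for p in model.parameters() if p.requires_grad], lr=2e-5)

x = torch.randn(8, 16)         # dummy batch
y = torch.randint(0, 5, (8,))  # dummy labels 0..4
loss = F.cross_entropy(model(x), y)
optimizer.zero_grad()
loss.backward()                # frozen layers receive no gradient
optimizer.step()

print(trainable)
```

If this works better than full fine-tuning, one could unfreeze more layers step by step (call the helper again with a larger `k` and rebuild the optimizer) and compare validation accuracy at each stage.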

2. Model comparison: compare against other pre-trained models. 🕚

The Hugging Face platform hosts many other BERT variants and pre-trained models for text classification.

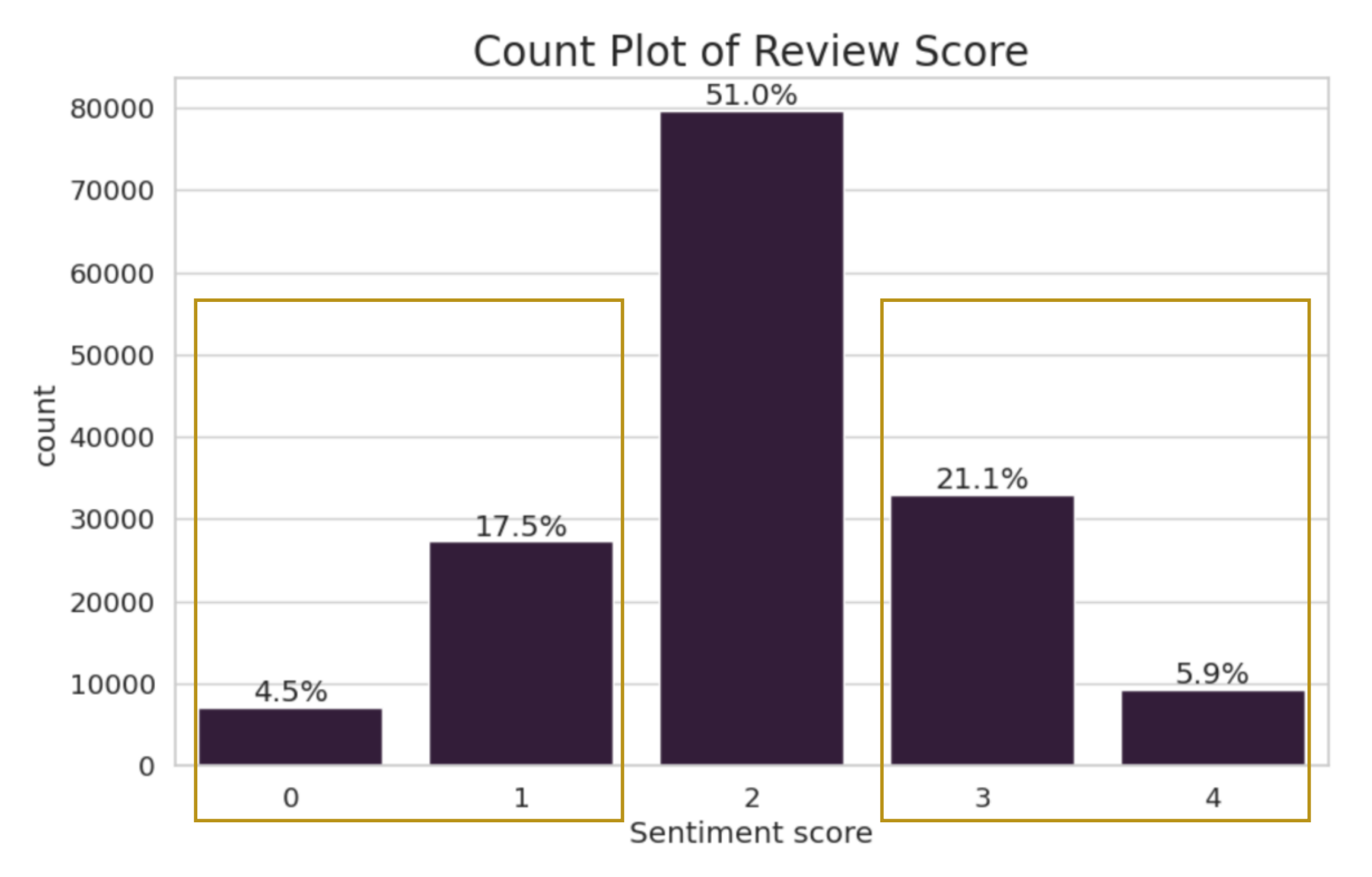

3. Data binning and preprocessing: during preprocessing, merge label 0 into 1 and label 3 into 4 to make the data less imbalanced. 🕥

For example, we can bin 8 clarity values into just 3 distinct buckets. In this project, I bin labels 0 and 1 into negative and labels 3 and 4 into positive.
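A minimal sketch of this binning in plain Python, assuming the labels are integers 0 to 4 ordered from most negative to most positive, and that label 2 stays in its own "neutral" bucket (the text only specifies 0/1 and 3/4). `BINS`, `bin_sentiment`, and the toy `labels` list are hypothetical, not the project's data.

```python
from collections import Counter

# Assumed mapping: 0-1 -> negative, 2 -> neutral (assumption), 3-4 -> positive.
BINS = {0: "negative", 1: "negative", 2: "neutral", 3: "positive", 4: "positive"}


def bin_sentiment(labels):
    """Map each 0-4 sentiment label to one of three coarser buckets."""
    return [BINS[label] for label in labels]


labels = [0, 1, 2, 2, 2, 3, 4, 1, 2, 3]  # hypothetical toy labels, not project data
binned = bin_sentiment(labels)

before = Counter(labels)  # counts per original label
after = Counter(binned)   # counts per bucket
print(before)
print(after)
```

On this toy list, the ratio between the largest and smallest class drops from 4:1 before binning to 4:3 after. With pandas, `Series.map` applies the same dictionary to a whole label column.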

Adding dummy variables (a.k.a. one-hot encoding) is another common technique.

💡 In my opinion, adding dummy variables for every category of every categorical column can produce very wide datasets and increase model variance; binning first reduces the number of categories, which mitigates this and can improve interpretability.
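To see why dummy variables widen the data, here is a small standard-library sketch with a hypothetical `one_hot` helper (pandas' `pd.get_dummies` does the same for DataFrames). One-hot encoding adds one 0/1 column per distinct category, so encoding the raw 0-4 labels yields five columns while encoding the binned labels yields three. The bucket mapping assumes label 2 is "neutral", and the label list is toy data.

```python
def one_hot(values):
    """Return (column_names, rows): one 0/1 column per distinct category."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


# Assumed 3-bucket mapping (label 2 as "neutral" is an assumption).
BINS = {0: "negative", 1: "negative", 2: "neutral", 3: "positive", 4: "positive"}
labels = [0, 1, 2, 2, 2, 3, 4, 1, 2, 3]  # hypothetical toy labels

raw_columns, raw_rows = one_hot(labels)
binned_columns, binned_rows = one_hot([BINS[v] for v in labels])

print(len(raw_columns), raw_columns)
print(len(binned_columns), binned_columns)
```

With several categorical columns, the dummy columns add up across columns, which is where binning before encoding saves the most width.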